Hello there!

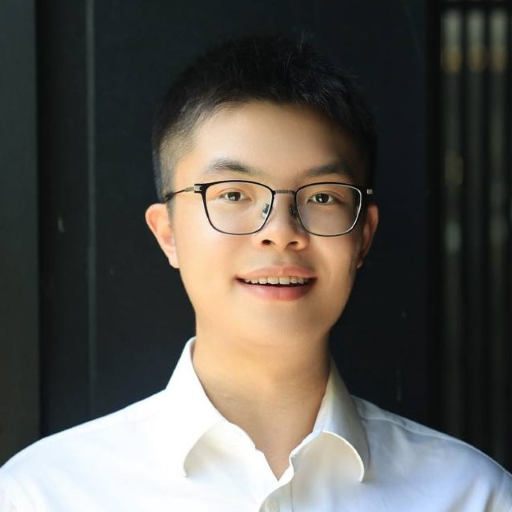

Welcome to Kunjun Li’s website!

I’m a final-year undergraduate student at the National University of Singapore (NUS). Currently, I am a research intern at Princeton University, supervised by Prof. Zhuang Liu. I also collaborate closely with Prof. Jenq-Neng Hwang from UW and Prof. Xinchao Wang from NUS.

My research focuses on Efficient Machine Learning, particularly optimizing training and inference of LLMs, Diffusion and Multimodal Models. I have been working on sparse attention, network pruning and efficient architectures. My work strives to achieve computational breakthroughs, making deep learning affordable and accessible to everyone, everywhere.

🔥 News

📝 Publications

NeurIPS 2025

Memory-Efficient Visual Autoregressive Modeling with Scale-Aware KV Cache


Kunjun Li, Zigeng Chen, Cheng-Yen Yang, Jenq-Neng Hwang

- Scale-aware KV cache tailored to the next-scale prediction paradigm in VAR.

- Lossless compression with a 90% KV cache memory reduction (85 GB → 8.5 GB) and substantial speedup.

- Facilitating the scaling of VAR models to ultra-high resolutions like 4K.

[paper]

[code]

[abstract]

Visual Autoregressive (VAR) modeling has garnered significant attention for its innovative next-scale prediction approach, which yields substantial improvements in efficiency, scalability, and zero-shot generalization. Nevertheless, the coarse-to-fine methodology inherent in VAR results in exponential growth of the KV cache during inference, causing considerable memory consumption and computational redundancy. To address these bottlenecks, we introduce ScaleKV, a novel KV cache compression framework tailored for VAR architectures. ScaleKV leverages two critical observations: varying cache demands across transformer layers and distinct attention patterns at different scales. Based on these insights, ScaleKV categorizes transformer layers into two functional groups: drafters and refiners. Drafters exhibit dispersed attention across multiple scales, thereby requiring greater cache capacity. Conversely, refiners focus attention on the current token map to process local details, consequently necessitating substantially reduced cache capacity. ScaleKV optimizes the multi-scale inference pipeline by identifying scale-specific drafters and refiners, facilitating differentiated cache management tailored to each scale. Evaluation on the state-of-the-art text-to-image VAR model family, Infinity, demonstrates that our approach effectively reduces the required KV cache memory to 10% while preserving pixel-level fidelity.
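As a rough illustration of the budgeting idea described in the abstract, the sketch below counts cached tokens when every scale's KV states are kept, then compares that with a setup where a few "drafter" layers keep a large budget and the remaining "refiner" layers keep a small one. The scale schedule, layer count, drafter set, and budgets are all hypothetical values chosen so the arithmetic lands on 10%; they are not ScaleKV's actual configuration, and the sketch uses one fixed drafter set rather than the scale-specific selection the paper describes.

```python
def full_cache_tokens(side_lengths):
    """Tokens held per layer after the last scale if every scale is cached."""
    return sum(side * side for side in side_lengths)


def budgeted_cache_entries(side_lengths, num_layers, drafter_layers,
                           drafter_budget, refiner_budget):
    """Cached (layer, token) entries when each layer is capped by its budget."""
    tokens = full_cache_tokens(side_lengths)
    entries = 0
    for layer in range(num_layers):
        is_drafter = layer in drafter_layers
        budget = drafter_budget if is_drafter else refiner_budget
        # A layer never stores more tokens than actually exist.
        entries += min(budget, tokens)
    return entries


# Hypothetical coarse-to-fine schedule: token-map side length at each scale.
SIDE_LENGTHS = [1, 2, 3, 4, 5, 6, 8, 10, 13, 16]
NUM_LAYERS = 16                 # assumption, not the real model depth
DRAFTER_LAYERS = {0, 1, 2, 3}   # assumption: which layers act as drafters

full_entries = NUM_LAYERS * full_cache_tokens(SIDE_LENGTHS)
kept_entries = budgeted_cache_entries(
    SIDE_LENGTHS, NUM_LAYERS, DRAFTER_LAYERS,
    drafter_budget=200, refiner_budget=24,
)
kept_ratio = kept_entries / full_entries
print(f"kept {kept_entries} of {full_entries} entries ({kept_ratio:.0%})")
```

With these made-up numbers the refiners hold most of the layers but only a small share of the retained cache, which is the asymmetry the drafter/refiner split relies on.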

CVPR 2025

TinyFusion: Diffusion Transformers Learned Shallow

CVPR 2025 Highlighted Paper (3%)

Gongfan Fang*, Kunjun Li*, Xinyin Ma, Xinchao Wang (* Co-first authors)

- End-to-end learnable depth pruning framework for Diffusion Transformers, retaining 50% of the model parameters and depth.

- Achieving 2× faster inference with comparable performance.

- Tiny DiTs at 7% of the original training costs.

[paper]

[code]

[abstract]

Diffusion Transformers have demonstrated remarkable capabilities in image generation but often come with excessive parameterization, resulting in considerable inference overhead in real-world applications. In this work, we present TinyFusion, a depth pruning method designed to remove redundant layers from diffusion transformers via end-to-end learning. The core principle of our approach is to create a pruned model with high recoverability, allowing it to regain strong performance after fine-tuning. To accomplish this, we introduce a differentiable sampling technique to make pruning learnable, paired with a co-optimized parameter to simulate future fine-tuning. While prior works focus on minimizing loss or error after pruning, our method explicitly models and optimizes the post-fine-tuning performance of pruned models. Experimental results indicate that this learnable paradigm offers substantial benefits for layer pruning of diffusion transformers, surpassing existing importance-based and error-based methods. Additionally, TinyFusion exhibits strong generalization across diverse architectures, such as DiTs, MARs, and SiTs. Experiments with DiT-XL show that TinyFusion can craft a shallow diffusion transformer at less than 7% of the pre-training cost, achieving a 2× speedup with an FID score of 2.86, outperforming competitors with comparable efficiency.
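The abstract does not spell out the differentiable sampling step, so the following is only a minimal sketch of one common way to sample a discrete choice in a relaxed form: Gumbel-softmax over a set of candidate layer masks. The function names, the "keep 2 of 4 layers" setting, and the uniform logits are illustrative assumptions, not TinyFusion's implementation. The sketch covers the forward sampling only; in practice the logits would be trained with an autodiff framework so gradients flow through the relaxed sample.

```python
import itertools
import math
import random


def candidate_masks(n_layers, n_keep):
    """All binary masks that keep exactly n_keep of n_layers layers."""
    masks = []
    for kept in itertools.combinations(range(n_layers), n_keep):
        masks.append([1 if i in kept else 0 for i in range(n_layers)])
    return masks


def gumbel_softmax(logits, temperature, rng):
    """Relaxed one-hot sample: softmax((logits + Gumbel noise) / temperature)."""
    noisy = []
    for logit in logits:
        # Clamp the uniform draw away from 0 and 1 so both logs stay finite.
        u = min(max(rng.random(), 1e-12), 1.0 - 1e-12)
        gumbel = -math.log(-math.log(u))
        noisy.append((logit + gumbel) / temperature)
    peak = max(noisy)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]


def sample_layer_mask(logits, masks, temperature, rng):
    """Pick the candidate mask with the largest relaxed probability."""
    probs = gumbel_softmax(logits, temperature, rng)
    choice = max(range(len(probs)), key=probs.__getitem__)
    return masks[choice], probs


rng = random.Random(0)
masks = candidate_masks(n_layers=4, n_keep=2)  # hypothetical: keep 2 of 4
logits = [0.0] * len(masks)                    # learnable in a real setup
mask, probs = sample_layer_mask(logits, masks, temperature=1.0, rng=rng)
print("sampled mask:", mask)
```

Lower temperatures push the relaxed sample closer to a one-hot choice, while higher temperatures keep it smoother.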

IPSN 2024

PixelGen: Rethinking Embedded Camera Systems for Mixed-Reality

ACM/IEEE IPSN 2024 Best Demonstration Runner-Up

Kunjun Li, Manoj Gulati, Dhairya Shah, Steven Waskito, Shantanu Chakrabarty and Ambuj Varshney

- Generates high-resolution RGB images from monochrome camera and sensor data.

- Novel representation of the surroundings from invisible signals.

[paper]

[code]

[abstract]

A confluence of advances in several fields has led to the emergence of mixed-reality headsets. They can enable us to interact with and visualize our environments in novel ways. Nonetheless, mixed-reality headsets are constrained today as their camera systems only capture a narrow part of the visible spectrum. Our environment contains rich information that cameras do not capture. It includes phenomena captured through sensors, electromagnetic fields beyond visible light, acoustic emissions, and magnetic fields. We demonstrate our ongoing work, PixelGen, to redesign cameras for low power consumption and to be able to visualize our environments in a novel manner, making some of the invisible phenomena visible. PixelGen combines low-bandwidth sensors with a monochrome camera to capture a rich representation of the world. This design choice ensures information is communicated energy-efficiently. This information is then combined with diffusion-based image models to generate unique representations of the environment, visualizing the otherwise invisible fields. We demonstrate that together with a mixed reality headset, it enables us to observe the world uniquely.